TLDR

- Researchers from Microsoft recently released a paper, "Textbooks Are All You Need II", introducing Phi-1.5, a new 1.3 billion parameter model whose performance on natural language tasks is comparable to that of models 5x larger.

- I explore the paper's claims and find that the model performs significantly worse than equal-sized counterparts when evaluated on perplexity. My investigation highlights how comparisons in the paper, while impressive, paint an incomplete picture (comparing apples to oranges).

- To evaluate beyond perplexity while avoiding inadvertent gaming of existing benchmarks, I also created a new "slang" understanding task and found that a model of similar size (Falcon-RW-1B) scores over 40% higher than Phi-1.5.

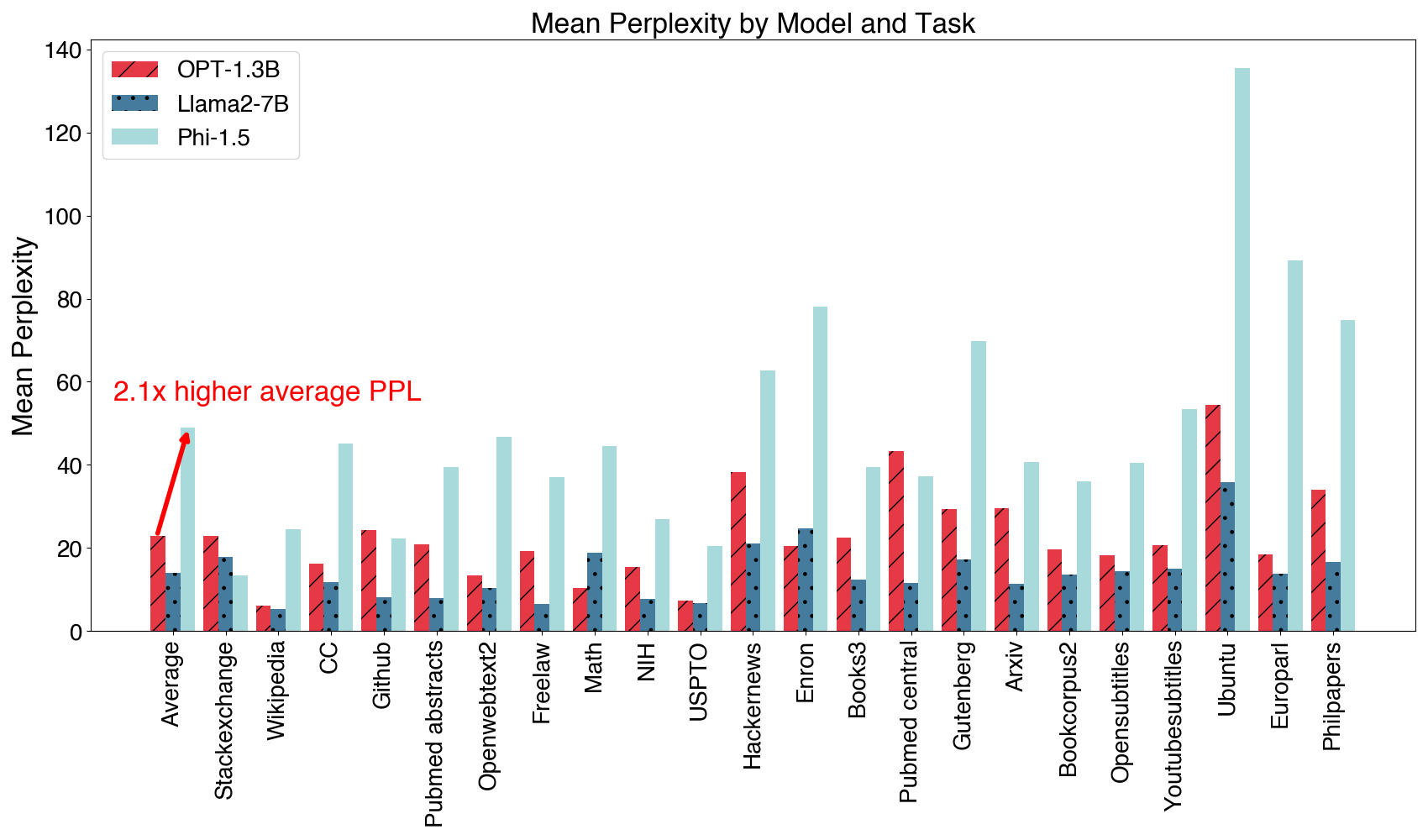

Figure 1: Perplexity of OPT-1.3B, LLAMA2-7B and Phi-1.5 models on various domains of the PILE (lower is better). On average, Phi-1.5 has about 2x higher perplexity than the similarly sized OPT model; it performs better only on Stackexchange (which is part of its training data) and Github.

Summary of the Paper

- Microsoft introduced Phi-1.5, a 1.3 billion parameter LLM, predominantly trained on 30 billion tokens of synthetic "textbook-like" data.

- The model's performance on common sense reasoning and question answering benchmarks is impressive, rivaling models 5-10 times its size.

- The paper positions “textbook quality” data as a way to enhance the learning process compared to traditional web data.

- Rather than relying on web-scraped data (apart from some filtered code data), training concentrated on imparting common sense and broad world knowledge.

- Phi-1.5 demonstrated competencies in multi-step reasoning, elementary coding, and even displayed rudimentary in-context learning abilities.

Twitter Controversy

In a series of tweets, Susan Zhang sparked discussion about Phi-1.5's benchmark results by hypothesizing that the model might have inadvertently been trained on benchmark datasets. Zhang presents instances where Phi-1.5 flawlessly solves math problems when the prompt matches the benchmark dataset's formatting, but fumbles when the format is varied slightly. This pattern, she argues, suggests memorization of data rather than genuine comprehension, and she calls on Microsoft to release the Phi models' training datasets so that the benchmark claims can be verified. The authors have responded to these claims, and I will not delve into that debate here. This post points to a more nuanced, and arguably more concerning, issue.

My explorations

The Curious Case of Data Diversity

How diversity was achieved in the Phi-1.5 training data remains shrouded in ambiguity (Section 2.2 of the paper).

- Notably, the authors mention that they carefully selected 20k topics to enforce data diversity.

- Elsewhere (such as on the Hugging Face model card), the authors write: "Given the nature of the training data, phi-1.5 is best suited for prompts using the QA format, the chat format, and the code format."

- The authors go on to say, "It requires intricate iterations, strategic topic selection, and a deep understanding of knowledge gaps to ensure quality and diversity of the data."

- In Section 2.4, the authors state in bold: "None of our models have undergone instruction finetuning or RLHF."

I am particularly concerned about the authors' comments about "intricate iterations, strategic topic selection, and a deep understanding of knowledge gaps," because such choices can inadvertently lead to gaming of benchmarks.

Perplexing Perplexities

The results in this section made me wonder whether Phi-1.5 should be pitted as a "language model" in the first place. There is no doubt that Phi-1.5 is a very valuable model, and one of the most impressive advances in the LLM literature in the last year. However, it is also important to understand what the model's capabilities and limitations are.

Phi-1.5 is presented as an LLM, so let us evaluate its language modeling performance by measuring its perplexity on multiple domains of the PILE dataset. On every domain except Stackexchange and Github, Phi-1.5 performs significantly worse than OPT-1.3B. Averaged across all domains, its perplexity is 2.1x higher than OPT-1.3B's and 3.5x higher than LLAMA2-7B's. Notably, Stackexchange was an important part of Phi-1.5's training data, and that data also included filtered code, so its lower perplexity on these two domains is understandable.

What does this mean? While Phi-1.5 is impressive on knowledge and common sense tasks, it is a much weaker "language model". Is this a good thing or a bad thing? It depends on what you are looking for. If you want a model that answers questions, Phi-1.5 is a great choice; if you want a model that generates text, it probably is not. But does perplexity even matter? I think it does. Perplexity measures how well a model predicts the next token in a sequence, that is, how well it has learned the "language". It has been the standard metric for evaluating language models for years. Whether it is still relevant today is for the community to decide. My point is that this side of the model is completely ignored in the paper, and researchers forming their opinion on the "importance of data" for pre-training need to know about it.
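
For readers less familiar with the metric, here is a minimal sketch of how perplexity is computed, assuming you already have the natural-log probability the model assigned to each actual next token (in practice these would come from running each model over the text of each PILE domain). The helper `average_ratio` is a hypothetical illustration of one way to summarize a per-domain comparison such as "2.1x worse"; the post does not say whether its averages are a mean of per-domain ratios or a ratio of averaged perplexities, so treat that choice as an assumption.

```python
import math
from statistics import mean


def perplexity(token_logprobs):
    """Perplexity of a text: exp of the mean negative log-likelihood per token.

    token_logprobs: natural-log probabilities the model assigned to each
    actual next token (one value per predicted token). For a whole domain,
    the log-probabilities of all its documents can be pooled into one list.
    """
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


def average_ratio(ppl_a, ppl_b):
    """Hypothetical summary: mean over shared domains of ppl_a / ppl_b.

    ppl_a, ppl_b: dicts mapping domain name -> perplexity for models A and B.
    A result above 1 means model A has higher (worse) perplexity on average.
    """
    shared = sorted(set(ppl_a) & set(ppl_b))
    if not shared:
        raise ValueError("the two models share no evaluated domains")
    return mean(ppl_a[d] / ppl_b[d] for d in shared)
```

As a sanity check on the definition: a model that assigns probability 0.5 to every observed token has perplexity 2, meaning it is as uncertain as a fair coin flip at each step. Lower is better.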


A New Dataset of Slang

One might argue that comparing Phi-1.5's perplexity on real data is unfair because it was trained to model a synthetic distribution. I agree that it is unfair, in much the same way that evaluating OPT on zero-shot benchmarks is.

But what if we created a new synthetic dataset and evaluated the Phi-1.5 model on it? Would that be fair?

I used GPT-4 to create a new dataset of 120 slang terms (this should be home turf for the Phi-1.5 models). Each "question" field contains a prompt such as "In internet lingo, 'GTG' means", and the "answer" field contains its meaning, such as "got to go.". I tested the Phi-1.5 and Falcon-RW-1B models by measuring the BLEU scores of their completions. Falcon-RW-1B's BLEU score (0.228) was over 40% higher than Phi-1.5's (0.158). This highlights the importance of "topic selection" in the "Textbooks are all you Need" paradigm. The dataset is available here for future use.

```
{"question": "When someone online mentions 'kudos', they are", "answer": "giving praise or recognition."}
{"question": "In digital forums, 'AFAIK' stands for", "answer": "as far as I know."}
{"question": "The term 'OOMF' commonly refers to", "answer": "one of my followers or friends."}
{"question": "On the internet, 'ICYM' is a shorter way to say", "answer": "in case you missed."}
{"question": "If someone online refers to their 'BFF', they're talking about their", "answer": "best friend forever."}
{"question": "In web discussions, the acronym 'QOTD' stands for", "answer": "quote of the day."}
{"question": "The online term 'FTW' is an acronym for", "answer": "for the win."}
```
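
The BLEU evaluation described above can be sketched as follows. This is a minimal, self-contained sketch, not the exact scoring code behind the numbers reported here: the tokenization (lowercase runs of letters, digits, and apostrophes), uniform 1- to 4-gram weights, and add-one smoothing for higher-order n-grams are all assumptions, and `complete` is a hypothetical stand-in for whatever function queries Phi-1.5 or Falcon-RW-1B.

```python
import json
import math
import re
from collections import Counter


def tokenize(text):
    # Assumption: lowercase and keep runs of letters/digits/apostrophes, so "go." matches "go".
    return re.findall(r"[a-z0-9']+", text.lower())


def ngram_counts(tokens, n):
    # Count every contiguous n-gram in the token list.
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(reference, hypothesis, max_n=4):
    """BLEU of one completion against one reference answer, add-one smoothed for n >= 2."""
    ref, hyp = tokenize(reference), tokenize(hypothesis)
    if not ref or not hyp:
        return 0.0
    log_precision = 0.0
    for n in range(1, max_n + 1):
        hyp_ngrams, ref_ngrams = ngram_counts(hyp, n), ngram_counts(ref, n)
        # Clipped matches: a reference n-gram is credited at most as often as it occurs there.
        matches = sum(min(count, ref_ngrams[g]) for g, count in hyp_ngrams.items())
        total = sum(hyp_ngrams.values())
        if n == 1:
            if matches == 0:
                return 0.0  # no word overlap at all
            precision = matches / total
        else:
            precision = (matches + 1) / (total + 1)  # add-one smoothing (assumption)
        log_precision += math.log(precision) / max_n
    # Brevity penalty: completions shorter than the reference are penalized.
    brevity = 1.0 if len(hyp) > len(ref) else math.exp(1 - len(ref) / len(hyp))
    return brevity * math.exp(log_precision)


def average_bleu(jsonl_text, complete):
    """Mean BLEU over JSON-lines records with "question" and "answer" fields.

    complete: hypothetical callable mapping a question prompt to a model completion.
    """
    scores = []
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue  # tolerate blank lines between records
        record = json.loads(line)
        scores.append(sentence_bleu(record["answer"], complete(record["question"])))
    if not scores:
        raise ValueError("no records found")
    return sum(scores) / len(scores)
```

Some form of smoothing matters here because many answers, such as "for the win.", are only a few tokens long, and unsmoothed sentence-level 4-gram BLEU could collapse to zero for most of them. As a check on the headline comparison, (0.228 - 0.158) / 0.158 is about 0.44, so Falcon-RW-1B scores roughly 44% higher.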

Evaluations and their Significance

- Topic Selection: The common sense reasoning, language understanding, and knowledge benchmarks draw their data from specific topics. If I already knew these topics, I could ask a language model to generate synthetic data about them, and a model trained on that data would then be able to answer questions about them. That would not be a fair evaluation of the model's capabilities, and this concern is far more nuanced than the prevailing Twitter controversy about "test set leakage". I am not claiming that this is what the authors did, but it may have been a side effect of selecting 20k topics. This makes me wonder what the significance of these "zero-shot evaluation" benchmarks even is when you can curate the topics you will be evaluated on. Indeed, textbooks are all you need, if you know which exam you are preparing for!

- Instruction Following: The authors repeatedly claim that the model acquires instruction-following capabilities despite never being explicitly instruction-tuned. However, if the model was pre-trained on synthetic data that may have been curated to take the form of instructions, what do these claims even mean? Performance on zero-shot benchmarks can improve significantly if the model has seen how to follow instructions during pre-training or fine-tuning, but OPT-1.3B was never given such an opportunity. Judged by perplexity, OPT-1.3B appears to be a significantly stronger model than Phi-1.5, and it is possible that OPT models would outperform Phi-1.5 models if given the same opportunity.

In Closing

While the Phi-1.5 model is undeniably a monumental stride in AI, the context of its benchmarks, training, and evaluations calls for careful scrutiny.

The authors provide no information about "topic selection" or "dataset construction" in this paper.

It is possible that the pre-training data of Phi-1.5 models already contained data in the form of instructions, questions, and answers. It may also have contained a disproportionately large amount of data on topics covered by the benchmark test sets. This is a concerning nuance that is easily overlooked.

As we proceed, our evaluations and benchmarks must evolve in tandem, ensuring that we compare apples to apples, not apples to oranges. Kudos to the authors for their commendable work and their spirit of open source, which made my evaluations possible.

Citation

If you found this post useful and wish to reference it in your work, you may use the following BibTeX citation:

```bibtex
@article{maini_phi_1_5,
  title = {Phi-1.5 Model: A Case of Comparing Apples to Oranges?},
  author = {Maini, Pratyush},
  year = {2023},
  url = {https://pratyushmaini.github.io/phi-1_5/}
}
```